Technical SEO can improve both the crawlability and the user experience (UX) of your website.

Technical SEO refers to HTML or server optimizations made to web properties that allow search engine spiders to better crawl and index content.

Technical SEO improves the crawlability of websites, increasing the chances that their content will be discovered and indexed by search engines.

Technical SEO can also improve the user experience and, in turn, the website’s overall visibility in SERP. For example, repairing broken links on a website may improve the rankings of certain pages for their target keywords, because search engines reward the better user experience that results.

Search engines tend to reward great user experiences. Since many technical optimizations improve the user experience, they can also improve positions in SERP and thus increase organic traffic.

Technical search engine optimization is a logical first step in an SEO checklist. You want to make sure search engines see a healthy website before you focus on other ranking factors, such as new content creation or on-page SEO.

See Google’s Search Essentials to learn more about the minimum technical requirements for being indexed in Google Search.

A technically well-optimized website typically shares several characteristics:

- page speed comparable to top competitors
- a clean URL structure that matches content buckets
- no crawl budget issues

Page speed (or load speed) refers to the overall load time of a given web page. It matters because crawlers, like human users, won’t wait long for a page to load before moving on.

If a crawler can’t get a page to load in a reasonable time, it may not crawl the page’s contents, but the attempt to crawl the page will count towards the site’s crawl budget. This means poor page speed can squander crawl budget.

Page speed also strongly shapes how user-friendly a website feels. Users won’t wait multiple seconds for pages to load, so if page speed is poor, conversions will suffer.

Learn how Google quantifies page speed and try using PageSpeed Insights to learn more.

It’s important for your URL structure to match the content map, or site architecture, of your website. Subtopics should be nested under their parent pages. This makes it much easier for crawlers to interpret your site’s content the way you intend.

For example: /digital-marketing/seo/technical or /menu/cheeseburgers/bacon-burger
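One way to check a site against this nesting rule is to look at each URL’s parent path. The sketch below assumes you already have a list of your site’s URL paths. The `missing_parents` helper and the sample paths are hypothetical. It reports pages whose immediate parent section is not itself a page.

```python
from posixpath import dirname


def missing_parents(paths):
    """Map each page to its immediate parent path when that parent is not itself a page.

    Assumes `paths` are URL paths such as "/menu/cheeseburgers/bacon-burger".
    """
    pages = {p.rstrip("/") or "/" for p in paths}
    gaps = {}
    for page in sorted(pages):
        parent = dirname(page)  # "/menu/cheeseburgers/bacon-burger" -> "/menu/cheeseburgers"
        # Top-level sections hang directly off the homepage, so "/" is never reported.
        if page != "/" and parent != "/" and parent not in pages:
            gaps[page] = parent
    return gaps


site = ["/", "/menu", "/menu/cheeseburgers/bacon-burger", "/digital-marketing/seo/technical"]
print(missing_parents(site))
```

Each flagged page is nested under a section that has no page of its own. Crawlers then have no parent page from which to understand the subtopic.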

The term “crawlability” refers to the ease with which search engine spider bots can crawl a website. Several aspects affect the crawlability of a website; below we outline the primary considerations.

The robots.txt file gives search engines instructions on which pages should and should not be crawled. However, it’s important to note that this file only makes requests; it is up to each search engine to honor them.
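Python’s standard-library `urllib.robotparser` can evaluate a robots.txt file, which shows how a compliant crawler reads these requests. The file contents and URLs below are hypothetical. This parser may also not interpret every Google-specific extension (such as wildcards in paths) exactly as Googlebot does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com; the paths are illustrative only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse the text directly instead of fetching it over HTTP

for url in ("https://www.example.com/menu/", "https://www.example.com/cart/checkout"):
    # can_fetch() answers the question a well-behaved crawler asks before requesting a URL
    print(url, parser.can_fetch("Googlebot", url))
```

Nothing here stops a crawler that ignores the file. The rules only take effect when the crawler chooses to check them.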

It is also important to note that robots.txt controls crawling, not indexing. To keep a page out of Google’s index, Google recommends a robots meta tag (`noindex`) rather than a robots.txt rule.

Robots meta tags, like the robots.txt file, make requests to search engines about the crawling and indexing of a page. However, robots meta tags are applied on a page-by-page basis and live in the `<head>` of HTML documents.

Example: `<meta name="robots" content="noindex" />`
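You can audit whether a page carries a noindex tag like the one above by parsing its HTML. This minimal sketch uses Python’s built-in `html.parser`. The `RobotsMetaParser` class and `robots_directives` helper are hypothetical names. The sketch only inspects the generic `robots` meta tag, not crawler-specific ones such as `googlebot`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            # "noindex, follow" -> {"noindex", "follow"}
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())


def robots_directives(html):
    """Return the set of robots directives declared in an HTML document."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


page = '<html><head><meta name="robots" content="noindex, follow" /></head><body></body></html>'
print(sorted(robots_directives(page)))  # ['follow', 'noindex']
```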

An XML sitemap is an XML file that lists the pages of a website.
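A sitemap follows the sitemaps.org protocol: a `urlset` root element containing one `url` entry per page, each with a `loc` and, optionally, a `lastmod` date. The sketch below builds one with Python’s `xml.etree.ElementTree`. `build_sitemap` and the example URLs are hypothetical. A site with more than 50,000 URLs would need to split them across several sitemap files.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return a minimal sitemap.xml document as a string.

    `entries` is a list of (url, last_modified) pairs, with dates as YYYY-MM-DD.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, <, > automatically
        ET.SubElement(url, "lastmod").text = lastmod
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


print(build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/menu/cheeseburgers/bacon-burger", "2024-01-10"),
]))
```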

It is best practice to submit your up-to-date sitemap URLs in Google Search Console and Bing Webmaster Tools.

It is also best practice to list your sitemaps in your robots.txt file, since crawlers often request this file first when they start crawling a site.
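Sitemap entries in robots.txt use a `Sitemap:` line with an absolute URL. They apply regardless of which `User-agent` group they appear near. As a sketch, Python’s `urllib.robotparser` (3.8 and later) can read those lines back with `site_maps()`. The robots.txt contents and sitemap URLs below are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that allows everything and advertises two sitemap files.
ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: https://www.example.com/sitemap-pages.xml
Sitemap: https://www.example.com/sitemap-products.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
print(parser.site_maps())  # the sitemap URLs a crawler would discover from this file
```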

Most websites don’t have to worry about crawl budget, as they aren’t large enough for it to be a concern.

However, larger websites may need to monitor how many pages are crawled on a daily basis. As a rule of thumb, if this number falls below 10% of the website’s total pages, the daily crawl budget is insufficient and steps should be taken to address it.
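The 10% rule of thumb is simple arithmetic. In this sketch, `crawl_coverage` is a hypothetical helper. The daily crawl count is assumed to come from a source such as the Crawl Stats report in Google Search Console or your server logs.

```python
def crawl_coverage(pages_crawled_per_day, total_pages, threshold=0.10):
    """Return (share of pages crawled per day, whether that share is below the threshold)."""
    ratio = pages_crawled_per_day / total_pages
    return ratio, ratio < threshold


ratio, too_low = crawl_coverage(pages_crawled_per_day=4_000, total_pages=50_000)
print(f"{ratio:.0%} of pages crawled per day; below threshold: {too_low}")
# 8% of pages crawled per day; below threshold: True
```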

301 and 302 redirects can be excellent SEO tools when URLs change. However, leaving too many redirected links in your content can squander crawl budget, because each redirect is an extra URL fetch that counts toward the crawl budget.

For this reason, redirects should only be the first step when URLs change. Afterward, links on your pages should be updated to point directly to the new URLs, so that no redirect is needed when they are clicked.
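Updating links after a URL change means following each redirect to its final destination and linking there directly. The sketch below assumes you have a map from old URL to new URL. The `redirects` dictionary and both helper functions are hypothetical. The sketch also collapses redirect chains and stops on loops.

```python
def final_destination(url, redirects, max_hops=10):
    """Follow a redirect map until reaching a URL that does not redirect."""
    seen = set()
    while url in redirects:
        if url in seen or len(seen) >= max_hops:
            raise ValueError(f"redirect loop or overly long chain at {url}")
        seen.add(url)
        url = redirects[url]
    return url


def update_links(links, redirects):
    """Point every internal link straight at its final URL so no redirect is needed on click."""
    return [final_destination(link, redirects) for link in links]


# Hypothetical 301 map: /menu-2019 -> /old-menu -> /menu is a two-hop chain.
redirects = {"/old-menu": "/menu", "/menu-2019": "/old-menu"}
print(update_links(["/menu-2019", "/about"], redirects))  # ['/menu', '/about']
```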

Eliminate instances of duplicate content to make it easier for crawlers to determine the proper canonical URLs.

Use canonical tags to preserve crawl budget. This can be particularly helpful for e-commerce websites that have several URL versions of one product page, for example one for each size or color.
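For product variants, a common pattern is to point every variant URL at one canonical URL. This sketch assumes that the `size` and `color` query parameters only select a variant. That setup is hypothetical, and your platform’s parameters may differ. The sketch strips those parameters to build the canonical tag.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical query parameters that only select a product variant.
VARIANT_PARAMS = {"size", "color"}


def canonical_url(url):
    """Drop variant-only query parameters (and any fragment) so all variants share one URL."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k not in VARIANT_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(url):
    """Return the <link rel="canonical"> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}" />'


print(canonical_tag("https://www.example.com/shirts/oxford?color=blue&size=m"))
# <link rel="canonical" href="https://www.example.com/shirts/oxford" />
```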

Technically healthy websites don’t host broken links.

Hosting broken links presents three primary problems: 1) it squanders crawl budget, 2) it provides a poor user experience, and 3) as a result, it can worsen visibility in SERP.
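Once you have crawl data, finding broken internal links is a set-membership check. The sketch assumes a hypothetical `link_graph` that maps each page to the internal URLs it links to. It also assumes a set of URLs known to return HTTP 200, for example from a crawl you have already run.

```python
def find_broken_links(link_graph, live_pages):
    """Return (source page, target URL) pairs whose target is not a live page."""
    return [
        (source, target)
        for source, targets in sorted(link_graph.items())
        for target in targets
        if target not in live_pages
    ]


# Hypothetical crawl data: internal links per page and the URLs that returned HTTP 200.
link_graph = {"/": ["/menu", "/specials"], "/menu": ["/menu/cheeseburgers", "/"]}
live_pages = {"/", "/menu", "/menu/cheeseburgers"}
print(find_broken_links(link_graph, live_pages))  # [('/', '/specials')]
```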

Ample internal linking helps crawlers make better use of crawl budget by giving them additional paths through the site.

It is also imperative to avoid “orphaned pages”. These are pages with no internal links pointing to them, meaning no other page on the website links to the orphaned page. Within your own site, crawlers can discover orphaned pages only through a sitemap.
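To find orphaned pages, compare the pages reachable through internal links with the pages listed in your sitemap. This breadth-first search sketch uses a hypothetical link graph and sitemap list. Any sitemap URL the search never reaches from the homepage is an orphan.

```python
from collections import deque


def find_orphans(link_graph, sitemap_urls, start="/"):
    """Return sitemap URLs that cannot be reached by following internal links from `start`."""
    reachable = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(set(sitemap_urls) - reachable)


links = {"/": ["/menu", "/about"], "/menu": ["/menu/cheeseburgers"]}
sitemap = ["/", "/menu", "/about", "/menu/cheeseburgers", "/catering"]
print(find_orphans(links, sitemap))  # ['/catering']
```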

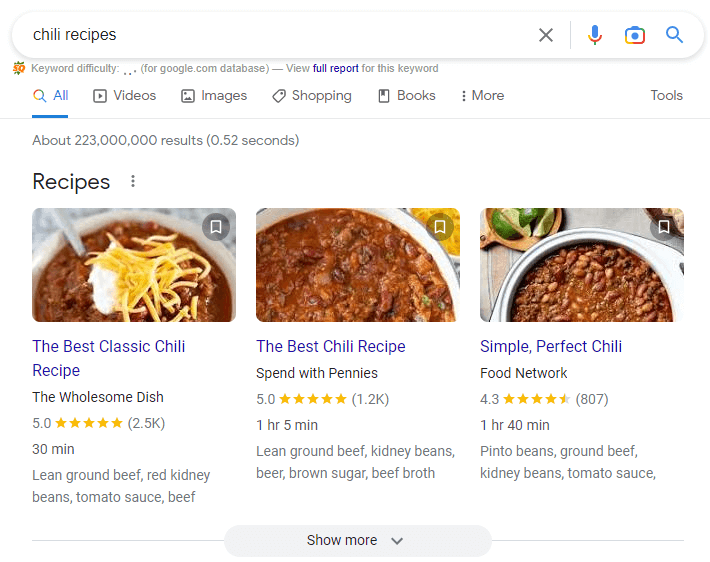

Structured data adds information that search engines can interpret to better understand a web page’s contents.

Using structured data can make a page eligible for rich snippets in SERP, which give searchers additional insight into a link’s contents.

Think of what happens when you search for a recipe or a video online: the results tend to look different. If you search for a recipe, you will likely see a string of recipes in SERP with star ratings and ingredients listed. Those recipe pages are using structured data.

This type of structured data uses the vocabulary of Schema.org, an open, collaborative project.
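Schema.org markup is commonly added to a page as a JSON-LD `<script>` element. The sketch below generates JSON-LD for a hypothetical recipe using real Schema.org `Recipe` properties (`recipeIngredient`, `aggregateRating`). The values are made up, and search engines may require more properties than shown here before they display a rich result.

```python
import json

# Hypothetical recipe data; property names come from the Schema.org Recipe type.
recipe = {
    "@context": "https://schema.org",
    "@type": "Recipe",
    "name": "Bacon Burger",
    "recipeIngredient": ["1 beef patty", "2 strips bacon", "1 brioche bun"],
    "aggregateRating": {"@type": "AggregateRating", "ratingValue": 4.8, "ratingCount": 112},
}

# Wrap the JSON in the script element that goes in the page's HTML.
script_tag = '<script type="application/ld+json">\n' + json.dumps(recipe, indent=2) + "\n</script>"
print(script_tag)
```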

We commonly use FAQPage schema to win placements for our clients in the “People also ask” section of Google Search results.
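FAQPage markup nests each question as a `Question` with an `acceptedAnswer`. This sketch builds that structure from (question, answer) pairs. `faq_jsonld` is a hypothetical helper, and the sample text is illustrative. Whether the markup earns any SERP feature is up to the search engine.

```python
import json


def faq_jsonld(pairs):
    """Build FAQPage structured data from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


faq = faq_jsonld([
    ("What is technical SEO?",
     "HTML and server optimizations that help search engines crawl and index a website."),
])
print(json.dumps(faq, indent=2))
```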

Twitter Cards are markup designed by Twitter. They can be used to attach rich photos or videos to tweets that link to a website.
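Twitter Cards are declared with `<meta name="twitter:...">` tags in the page’s `<head>`. The sketch below emits a `summary_large_image` card. The helper name and inputs are hypothetical. Values are HTML-escaped so that quotes or ampersands in titles don’t break the markup.

```python
from html import escape


def twitter_card_tags(title, description, image_url, card="summary_large_image"):
    """Return Twitter Card <meta> tags for a page's <head>."""
    tags = {
        "twitter:card": card,
        "twitter:title": title,
        "twitter:description": description,
        "twitter:image": image_url,
    }
    return "\n".join(
        f'<meta name="{name}" content="{escape(value)}" />' for name, value in tags.items()
    )


print(twitter_card_tags("Bacon Burger", "Our best seller & more", "https://www.example.com/burger.jpg"))
```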

The Open Graph protocol is structured data designed by Facebook, but many social networks use it to display web pages as rich objects in the social graph.
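Open Graph tags look similar to Twitter Card tags but use the `property` attribute with `og:` names. This hypothetical helper emits the basic properties: `og:type`, `og:url`, `og:title`, `og:description`, and `og:image`. The inputs are illustrative.

```python
from html import escape


def open_graph_tags(url, title, description, image_url, og_type="website"):
    """Return Open Graph <meta> tags; Open Graph uses the `property` attribute, not `name`."""
    tags = {
        "og:type": og_type,
        "og:url": url,
        "og:title": title,
        "og:description": description,
        "og:image": image_url,
    }
    return "\n".join(
        f'<meta property="{prop}" content="{escape(value)}" />' for prop, value in tags.items()
    )


print(open_graph_tags("https://www.example.com/menu", "Menu", "Burgers and more",
                      "https://www.example.com/menu.jpg"))
```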

Hreflang tags are HTML attributes that help search engines understand the relationship between versions of a page written in alternate languages, and which version to show based on the user’s language and location.

If you have an international audience, the proper use of hreflang tags will be essential for serving the appropriate version of your website to your target audience.

For this reason, hreflang tags play a vital role in international digital marketing.
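In HTML, hreflang annotations are `<link rel="alternate" hreflang="...">` elements in the `<head>`. Each language version should list every version, including itself. This sketch generates that set. `hreflang_tags` and the URLs are hypothetical. The `x-default` value marks the fallback version for users whose language doesn’t match any listed version.

```python
from html import escape


def hreflang_tags(alternates, default_url=None):
    """Return <link rel="alternate" hreflang="..."> tags for every language version.

    `alternates` maps a language(-region) code such as "en-us" to that version's URL.
    The same full set of tags belongs on every version, including a self-reference.
    """
    tags = [
        f'<link rel="alternate" hreflang="{code}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())
    ]
    if default_url:
        # x-default names the version to show when no listed language matches the user.
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />')
    return "\n".join(tags)


print(hreflang_tags(
    {"en-us": "https://www.example.com/", "es-mx": "https://www.example.com/es-mx/"},
    default_url="https://www.example.com/",
))
```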

If a website collects information from users, it needs to encrypt that data with an SSL certificate and serve its pages over HTTPS. SSL stands for Secure Sockets Layer; today’s certificates actually use its successor, TLS.

Websites that don’t have an SSL certificate may see a boost in SERP rankings after adding one. This is because Google wants to create a safe web for users, so it rewards HTTPS as a ranking signal.

Meta descriptions work in conjunction with strong titles to present a page’s value proposition to search engine users. A strong meta description and title can improve the page’s click-through rate.

On the other hand, missing, overly long, or overly short meta descriptions and titles can present technical SEO issues. Missing meta descriptions deserve particular attention: without one, search engines will often generate their own description for your page, so for maximum control you should write your own. The number one reason to write your own meta descriptions is so you can include a CTA (call-to-action).
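A simple audit flags missing, short, or long titles and descriptions. The character ranges in this sketch are assumptions, not published limits. Search engines truncate snippets by display width rather than by a fixed character count. `audit_snippet` is a hypothetical helper.

```python
# Rule-of-thumb length ranges in characters; these are assumptions, not published limits.
TITLE_RANGE = (30, 60)
DESCRIPTION_RANGE = (70, 160)


def audit_snippet(title, description):
    """Flag a title or meta description that is missing, too short, or too long."""
    issues = []
    for label, text, (low, high) in (
        ("title", title, TITLE_RANGE),
        ("meta description", description, DESCRIPTION_RANGE),
    ):
        length = len((text or "").strip())
        if length == 0:
            issues.append(f"missing {label}")
        elif length < low:
            issues.append(f"{label} too short ({length} chars)")
        elif length > high:
            issues.append(f"{label} too long ({length} chars)")
    return issues


print(audit_snippet("Bacon Burger", None))
# ['title too short (12 chars)', 'missing meta description']
```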

Professional technical SEO services analyze web properties with several types of technical SEO audits. Technical SEO strategies are then developed from the audit results, and ultimately the service addresses every repairable issue identified.

After initial optimizations, technical SEO services will monitor and repair new issues as they arise.

We include technical SEO services with all of our SEO campaigns. In fact, technical optimizations are almost always our first step in any SEO campaign.

Learn more about technical SEO and the optimization needs of your website.

Schedule a complimentary consultation with one of our SEO experts.